Category: Servers and Storage

2008-03-10 13:41:09

| | In DMX's corner | In USP's corner |
|---|---|---|
| Customer | Vincent Hilly, managing director, data administration at Depository Trust & Clearing Corp. (DTCC) | Lance Smith, managing director of storage services at Atos Origin |
| Business | Protecting financial trade information | Providing on-demand global IT services |
| Scale | Hundreds of terabytes in production | Nearly 1 petabyte in production |
| Challenge | Span multiple sites with heavy replication loads | High-growth heterogeneous customers, multiple sites |
| Major consideration | Operate in a highly regulated environment | "Reducing cost for storage services without sacrificing reliability." |
| Array choice | EMC Corp. Symmetrix DMX-3 | Hitachi Data Systems (HDS) Corp. USP1100 |
| Key criteria | "I needed unquestionable scale, performance and reliability." | Rapid provisioning, heterogeneous migrations |
| Selling point | "EMC's maturity in advanced replication beats anyone." | "HDS promised scalable production virtualization and they delivered." |
| In the future | Use DMX-3 to expand tiering strategy | Use USP as cornerstone for on-demand storage and information lifecycle management strategy |

Ask any senior IT executive in a large enterprise to describe their "storage strategy" and you probably won't hear about their fabric architecture or storage management software--and you certainly won't hear about their information lifecycle management strategy. The IT brass will most likely want to talk about the tier-one storage array they deploy. In the rarefied air of multihundred terabyte or multipetabyte tier-one production environments, the storage array is the anchor for storage decision-making. With just four words, "We're a DMX shop" or "We're a USP shop," a storage architect telegraphs a wealth of information about their entire storage strategy.

These top-of-the-line multipetabyte behemoths are much more than mere physical storage devices. In a very real sense, the EMC Corp. Symmetrix DMX-3 and the Hitachi Data Systems (HDS) Corp. TagmaStore Universal Storage Platform (USP) 1100 are bellwethers for what the storage industry will deliver in coming years, with each platform showcasing not only the most advanced capabilities of EMC and HDS, but their philosophy about how IT teams should deploy, manage, grow and protect their infrastructures. EMC and HDS are locked in a fierce battle for control of the data center that could shape the broader enterprise IT infrastructure for years to come.

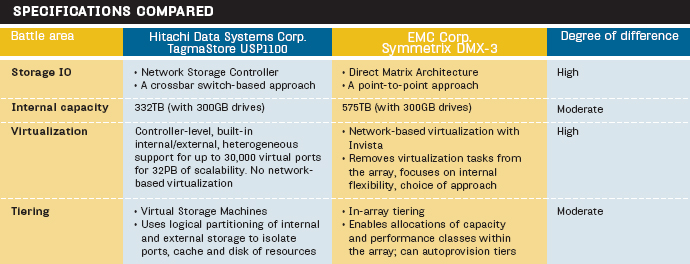

At first blush, a direct comparison between DMX and USP seems easy enough: Both vendors leverage cache extensively across a multicontroller architecture, claim petabyte (PB)-range scaling and millions of aggregate IOPS, support many combinations of disk types, and offer clear paths to storage consolidation and virtualization. However, as many prospective customers who have looked at both arrays in detail can attest, making a fine-grained "apples-to-apples" comparison between EMC DMX-3 and HDS USP1100 can be exceedingly difficult, even for experienced storage managers.

The reason it's difficult to directly compare the two arrays is that their core architectures are so distinct. Understanding these differences in design approach and philosophy is at the very core of understanding not only DMX-3 and USP1100, but the strategic trajectories of EMC and HDS.

HDS USP1100

EMC DMX-3

The DMX-3 is EMC's flagship product in the Symmetrix family, and reigns as the largest high-performance storage array on the market, besting the internal capacity of the HDS USP with its raw capacity of 575TB for open-systems environments (again, assuming 300GB drives, using up to nine physical bays). The DMX can deliver from 32GB/sec to 128GB/sec of data bandwidth, support up to 64,000 logical devices, and can scale from 96 to 2,400 drives online. It all adds up to a very compelling capacity consolidation story.

IO, memory and performance

The HDS pitch: The USP is based on a very powerful crossbar switch architecture that separates control data and memory within its storage controllers. When combined with our approach to cache memory optimization, this networked storage controller makes the USP a much higher performer than the DMX-3.

The EMC pitch: The DMX-3's point-to-point memory architecture delivers very high aggregate IO that can be fine-tuned for scalability and the highest possible performance across real-world workloads. The USP can't match this performance.

The real issue: Both companies have markedly different technological approaches to handling IO and memory. These differences provide the wiggle room to bash each other, encouraging users to question the other company's fundamental design principles.

HDS approach

Without question, HDS places more emphasis on its controller architecture innovations than any other vendor in the market. As a result, any prospective customer evaluating HDS today must become comfortable with the company's explicit and unapologetic controller-centric view of enterprise storage intelligence, which differs sharply from EMC's approach with the DMX-3.

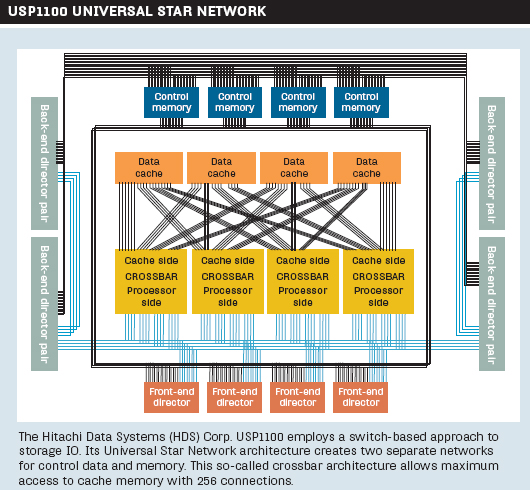

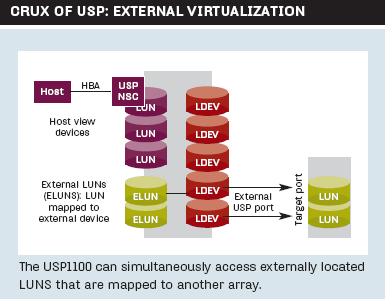

At the core of the HDS USP1100 is the Hitachi Universal Star Network architecture, a massively parallel crossbar switch (see "USP1100 Universal Star Network," below). The key distinction of this controller, and one of the core design innovations enabling the entire HDS storage strategy, is that it creates two separate networks for control data and cache memory. There are actually four crossbar switches in the controller dedicated to providing nonblocking access to cache memory. In concert with this network, a smaller parallel network manages control data on a point-to-point network communicating between the MIPS RISC processors in the controller and the control memory. The crossbar multiplexes across 16 paths connecting the processors to the cache modules. This twin network of control data and cache plays a central role in far-ranging duties not just for the USP box, but for HDS' wider SAN strategy, including controller-based virtualization, heterogeneous replication and logical partitioning.

A range of algorithms are applied against this network controller architecture to provide cache optimization based on workload or dataset selection for permanent cache residency. The result of this extremely optimized architecture is the ability to transform the storage controller into a real-time, in-band IO platform for extremely large, mission-critical environments that's also capable of pervasive internal and external virtualization.


EMC approach

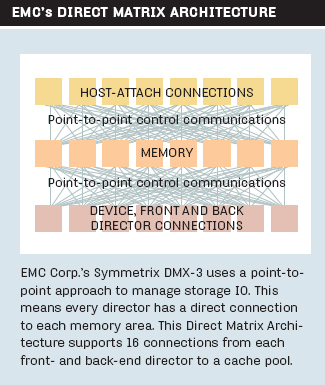

The DMX-3 sports a point-to-point Direct Matrix Architecture (see "EMC's Direct Matrix Architecture," at right), which enables very scalable performance. It's a critical element in the DMX story, but definitely not positioned as the crown jewel of the platform the way HDS touts its Universal Star Network architecture. The Direct Matrix connects all points between every front- and back-end director and their cache boards running on 1Gb/sec connections, not a crossbar switch like the USP. Because EMC scales performance in the DMX by providing direct point-to-point connections between every front- and back-end director and their cache boards, the company emphasizes that this architecture enables it to add more memory and capacity to support custom performance for different tiers. EMC leverages PowerPC chips rather than MIPS RISC processors.

One key EMC customer happily agrees that the DMX design works. "I can trust EMC's approach to scaling performance," says Vincent Hilly, managing director, data administration at Depository Trust & Clearing Corp. (DTCC), a financial services provider with a multihundred terabyte, multisite replicated environment.


Performance face-off

Users familiar with both systems in production environments say the arrays provide more than adequate levels of performance under the vast majority of operating environments. However, because EMC and HDS can hammer on each other's component-level architectural differences, they'll do so (see "Point, counterpoint," PDF at right). For example, HDS claims the DMX suffers from IO congestion because of a mixture of control and data transfers--an attack on EMC's point-to-point architecture. EMC counters that the USP suffers from significant latency issues because it needs to manage its separate control and data memory networks, indicting HDS' switch-based approach.

Point, counterpoint: A Q&A on EMC DMX-3 vs. Hitachi USP1100 (PDF).

The reality is that a successful, high-scale, high-performance storage array can be built on either a switch-based or point-to-point-based architecture. Outside of a specific usage context, these are red herring technical arguments. Congestion and latency issues may or may not surface in the way memory is handled by the two architectures. Any competitive attacks that hark back to the controller or memory issues of the USP or DMX must be viewed in context of workload, deployment, memory optimization, connectivity and scale. Key words to listen for in a sales call are "congestion," "latency," "commingled" and "contention." Used in isolation, not in context, they're just words that indicate generic issues of an architectural approach, not a de facto limitation of the USP or DMX.

Capacity and scaling

The HDS pitch: A common approach to growing both internal and external capacities is the path to the highest possible scalability and flexibility, and USP is the only platform that can provide this. EMC doesn't even have a story for scaling outside the DMX itself.

The EMC pitch: The DMX-3 can scale to 1.1PB inside the array, and support the highest levels of flexibility for creating internal storage tiers at those scales. This saves money and management costs. Scaling the IO for production workloads outside of the array isn't a realistic option.

The real issue: Both arrays have demonstrated comparable internal scaling capabilities. The DMX-3 has higher absolute internal capacity, but the USP1100 can scale externally to support multipetabyte capacities. The question related to both arrays is, at what price-to-performance ratio are these numbers achieved?


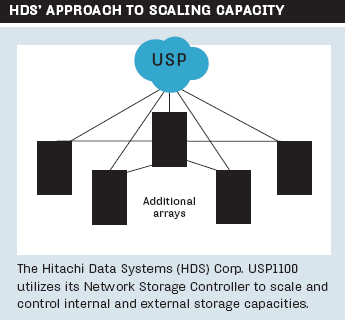

HDS

For anyone unfamiliar with the HDS controller-centric approach, the 32PB capacity claim can be confusing. What's behind this massive number is that the Universal Star Network architecture within the USP (and its freestanding deployment as HDS' TagmaStore Network Storage Controller line outside of the array) can establish virtual ports within and outside the array, providing a common, centralized controller-based means of scaling the entire system to 32PB, including internal (332TB) and external storage (see "HDS' approach to scaling capacity"). EMC is quick to note that HDS can't produce a customer who's even close to that level of production deployment with the USP. In any case, it demonstrates an important theoretical point that HDS seeks to emphasize: Its controllers are powerful and, over time, they'll continue to support increased levels of storage.

"We are absolutely using the USP for virtualization of production workloads," says Lance Smith, managing director of storage services at Atos Origin's North America Data Center Services in Houston.

Today, HDS articulates a scaling story for USP that shows tiering strategies and TCO benefits to be gained from comparatively less-expensive arrays being controlled by the software intelligence driving through the USP. One strategic HDS customer, Paul Ferraro, storage manager at wireless network company Qualcomm Inc. in San Diego, is using HDS "to drive tiering across multiple arrays in several areas, including backups, staging, as well as for our database customers."

EMC

Listening to EMC customers, one clear and distinct advantage comes up consistently: the company's reliable performance at scale. "DMX is where we looked to establish our highest quality of service for our customers," says John Halamka, CIO at CareGroup Health System and Harvard Medical School. "We can reliably run all of our mission-critical production servers back to the DMX platform."

The DMX-3 provides a wide range of support for drive type, speed and size configurations, as well as for performance-to-capacity tuning. This is a platform designed with the largest Fortune 500 mission-critical environments in mind. In the unlikely event a customer chose to pack a single DMX-3 with its absolute maximum drive capacity of 2,400 500GB drives, it could max out at 1.1PB inside the array. As such, EMC wins the theoretical in-array scaling story, hands down. The DMX line has also made some significant advances in automating the ease of online provisioning. When combined with the various drive types and speeds that are supported, it makes sense that EMC places so much emphasis on physical consolidation: The company has a viable story for supporting it.
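The in-array maximum quoted above can be sanity-checked with simple arithmetic. The sketch below multiplies the drive count by the drive size given in the article and reports the raw total in decimal and binary units. It ignores RAID, hot spares and formatting overhead, and the function and variable names are illustrative only. The raw product lands close to the 1.1PB figure, although which unit or overhead EMC's number assumes isn't stated here.

```python
# Raw-capacity arithmetic for a fully populated array.
# Drive count and drive size come from the article; the unit
# conversions are standard, and the names are illustrative.

DRIVES = 2_400    # maximum drive count cited for the DMX-3
DRIVE_GB = 500    # decimal gigabytes per drive (10**9 bytes each)


def raw_capacity_bytes(drives: int, drive_gb: int) -> int:
    """Return raw capacity in bytes, before RAID, spares or formatting."""
    return drives * drive_gb * 10**9


raw = raw_capacity_bytes(DRIVES, DRIVE_GB)
decimal_pb = raw / 10**15   # petabytes (powers of ten)
binary_pib = raw / 2**50    # pebibytes (powers of two)

print(f"raw: {decimal_pb:.2f} PB decimal, {binary_pib:.2f} PiB binary")
# prints "raw: 1.20 PB decimal, 1.07 PiB binary"
```

Usable capacity in a real deployment would be lower once protection schemes and spares are accounted for, so the raw figure is best read as an upper bound.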

What to consider

At a technical level, the USP and DMX-3 execute their scaling functions very differently, but both approaches are highly automated for multiple disk types. More importantly, the ultimate scalability difference between the two arrays has everything to do with the customer's own strategy for scaling: Does the storage infrastructure demand purely internal capacity, or a mix of internal and external scale? How does the scaling strategy align with one's tiering strategy and infrastructure workflow?

Virtualization

The HDS pitch: Virtualization should be executed at the storage controller level. This provides the best ease-of-use and flexibility for the infrastructure. Doing network-based virtualization like EMC proposes creates more complexity.

The EMC pitch: Customers demand multiple approaches to virtualization and EMC will support this reality. Today, most of what HDS claims as benefits of virtualization can be accomplished with other tools. The HDS approach to controller-based virtualization is too rigid.

The real issue: HDS is married to a controller-based strategy for its USP. Customers have to embrace this. EMC redirects today's virtualization requirements toward in-box DMX flexibility and tools while its network-based approach matures.


HDS and virtualization

HDS has a very clear recommendation for storage virtualization: Do virtualization at the storage controller level, period. Because of its ability to virtualize heterogeneous, external arrays, HDS promotes the USP's ability to create very flexible storage tiers and easily move data. Prospective customers need to understand the implications of this approach because how and when they decide to virtualize may well become a determining factor in deciding between EMC and HDS.

Certainly, virtualizing at the controller level with USP can provide some distinct advantages. "With the USP, we are able to get our provisioning for new capacities down to under four hours for one terabyte, as well as maintain set utilization levels across the entire tier," says Atos Origin's Smith. "This lets us begin storage forecasting ahead of demand."

For heterogeneous storage shops like Atos Origin, the controller-based virtualization approach of the USP makes great sense because it plays nice with all major vendors. Additionally, for a multihundred terabyte production environment like Atos Origin, where data migrations and rapid provisioning are critical activities, the simplicity of a consolidated management approach with the USP makes great sense.

EMC's virtualization

The word "virtualization" has many meanings in the increasingly broad world of EMC. The company has embraced a de facto "portfolio" approach to virtualization technologies, encompassing all levels of the infrastructure: hosts (VMware), network (Rainfinity for files and Invista for blocks) and, obviously, internal virtualization within the arrays themselves. All indications are that the one layer of the infrastructure EMC will likely skip is storage controller-based virtualization akin to the HDS USP.

New technology directions

Both EMC Corp. and Hitachi Data Systems (HDS) Corp. are tight-lipped when it comes to discussing the roadmaps of their flagship arrays. However, the two companies are willing to hint at some very interesting new features that may show up in the next two years.

Advanced capacity optimization: The ability to reduce the amount of data resident on the production storage array is a key issue that users are calling on vendors like EMC and HDS to address. Expect to see either or both of these companies talk about ways to reduce the footprint of their production data stores, increasing the effective capacities of their arrays. Timeframe: 24 months

Advanced data protection and recovery: Both EMC and HDS are interested in fine-grained data capture functionality for their highest-performing arrays. Expect both companies to add advanced image making (e.g., snapshot) and replication capabilities that take advantage of the respective capabilities of their products. Timeframe: 12 months

Advanced security and encryption: Data security is now a paramount issue for both EMC and HDS. The companies are likely to make high-profile announcements around their ability to provide data-at-rest encryption capabilities. Timeframe: 12 months

What to consider

The one indisputable fact is that storage managers must either entrust their storage array controller to handle virtualization (HDS USP) or believe that a variety of virtualization tools, including a network-based virtualization appliance, will deliver the goods (EMC DMX-3). Once again, there's no middle ground. Whatever approach you eventually take, expect a high degree of vendor lock-in.

The ROI battle

The HDS pitch: The economic benefits of the USP are significant because of its flexibility. Customers can create highly optimized internal and external tiering strategies with USP, far beyond what's possible with a DMX-based approach.

The EMC pitch: The DMX enables massive consolidation on a single platform, with ongoing operating efficiencies as a result. HDS can't provide the single-platform footprint that DMX does to achieve these goals.

The real issue: A user's own approach to scaling, storage management and consolidation will point them to either the DMX-3 or USP1100.

HDS economics

The TCO and ROI story HDS uses to sell the USP1100 is based very much on the inherent virtualization capabilities of the platform itself. HDS is now selling diskless versions of its Network Storage Controller, which shows that the firm is serious about ultimately riding commodity price curves for all disk types and extending its virtualization strategy as widely as possible into all tiers.

However, in 2007, the reality of tier-one production storage is that tight integration with memory schema and disk architectures isn't disappearing any time soon.

DMX economics

EMC's positioning for DMX's ROI is based largely around the direct benefits derived from consolidating storage onto a single, flexible, high-performance platform. The story resonates particularly well with customers in the multihundred terabyte range who are sophisticated enough to take full advantage of the breadth that DMX can consolidate across multiple tiers and multiple locations.

What to consider

The USP1100 and DMX-3 are massively capable, high-performance arrays. However, as our head-to-head comparison has borne out, what's most important is how the architectures of the two arrays define the respective strategic directions of EMC and HDS. HDS is unabashedly storage controller-centric and that paradigm is a first principle around which all else follows. EMC is now a global IT powerhouse with a vast portfolio of options that can satisfy any imaginable user configuration. These two realities shape product and strategy. Thus, thinking of USP1100 vs. DMX-3 in a vacuum isn't prudent, because they're simply anchors of entirely different approaches to growing, managing and extending the IT infrastructure. Only after a detailed and arduous review should a user be ready to volunteer the information that "I'm a USP shop" or "I'm a DMX shop." Those are four words that will echo through the data center for years to come.